Detect Ping Pong Ball With Deep Learning

Tracking a ping pong ball with decent accuracy

Earlier I mentioned that the new direction of my project was to create a 3D reconstruction of a ping pong game using pose detection and ball tracking. My first step was to track the ball movement, and it looks like I did it (with decent accuracy)!

YOLO

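You Only Look Once (YOLO) is a deep-learning architecture for object detection, which is the building block I need for tracking the ball. The benefit of using this model over something like Fast R-CNN is that inference is very fast: as the name implies, it only looks at the image once, predicting boxes and classes in a single pass instead of first proposing regions and then classifying them.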

I actually didn't realize that a whole auto-referee system for ping pong had already been created several years ago, as described in this paper: https://arxiv.org/pdf/2004.09927. Those guys, however, use a two-step system to detect the ball; I want to do it faster so that we can run the app on a smartphone.

YOLO is currently at version 11 (YOLO11). The Ultralytics Python library makes it really easy to use as well, since I only need to point it at my dataset config file and it takes care of the rest.

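```python
from ultralytics import YOLO

# Start from the pretrained YOLO11 nano weights
model = YOLO("yolo11n.pt")

results = model.train(
    data="../dataset/opentt.yaml",  # dataset config
    project="..",                   # where training runs are saved
    epochs=300,                     # upper limit on epochs
    patience=10,                    # stop early after 10 epochs without improvement
    batch=-1,                       # let the library pick a batch size automatically
    imgsz=640,                      # training image size
)
```

The harder part was getting good data, but luckily the authors of the paper I mentioned above made their dataset open source. All I needed was a nice conversation with ChatGPT, and we could pull frames and annotations from the video!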
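To give an idea of what the annotation side of that involves, here is a minimal sketch of that kind of conversion (not the actual script from this project). It assumes each annotation gives the ball's center in pixels for a frame. Since YOLO wants a bounding box, it also assumes a fixed box size around that center. The function name, `box_px`, and the example numbers are all hypothetical.

```python
def ball_to_yolo_label(cx, cy, img_w, img_h, box_px=20, class_id=0):
    """Turn a ball center (in pixels) into one line of a YOLO label file.

    A YOLO label line is: class x_center y_center width height,
    with the four box values normalized to the 0-1 range.
    box_px is an assumed fixed box size, not a value from the dataset.
    """
    # Skip frames where the ball center is outside the image (not visible).
    if not (0 <= cx < img_w and 0 <= cy < img_h):
        return None
    x = cx / img_w
    y = cy / img_h
    w = box_px / img_w
    h = box_px / img_h
    return f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"


# Example: a ball in the middle of a 1920x1080 frame.
print(ball_to_yolo_label(960, 540, 1920, 1080))
```

Each extracted frame would then get a `.txt` file with the same name as the image, containing one such line per ball.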

You can look at the rest of the code, which I'm pretty proud of. This is actually my first deep learning project where I wrote my own code to train a model (even though I used YOLO).

Results

After 4.5 hours of training on my RTX 3070 Ti, I thought it was all a waste because the loss was really high and training stopped automatically at 46 epochs (presumably early stopping, since I set patience=10). Even worse, I tried testing with an image I took a while ago with James and it didn't work! But I figured out it was because the ball in that image was orange, and I had trained only on white balls.
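For reference, patience-based early stopping works roughly like this: keep track of the best validation score so far, and give up once a set number of epochs pass without beating it. The sketch below is a simplified illustration of the idea, not the library's actual implementation, and the scores in it are made up.

```python
def stopping_epoch(scores, patience=10):
    """Return the 1-based epoch at which training would stop.

    scores: one validation score per epoch (higher is better).
    Training stops once `patience` epochs have passed since the best score.
    Returns None if that never happens.
    """
    best = float("-inf")
    best_epoch = 0
    for epoch, score in enumerate(scores, start=1):
        if score > best:
            # New best score: remember it and reset the waiting period.
            best, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return None


# Made-up scores: improve for 3 epochs, then plateau.
scores = [0.1, 0.2, 0.3] + [0.3] * 20
print(stopping_epoch(scores, patience=10))
```

If this is what happened in my run, the best weights would have come from roughly 10 epochs before the stop.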

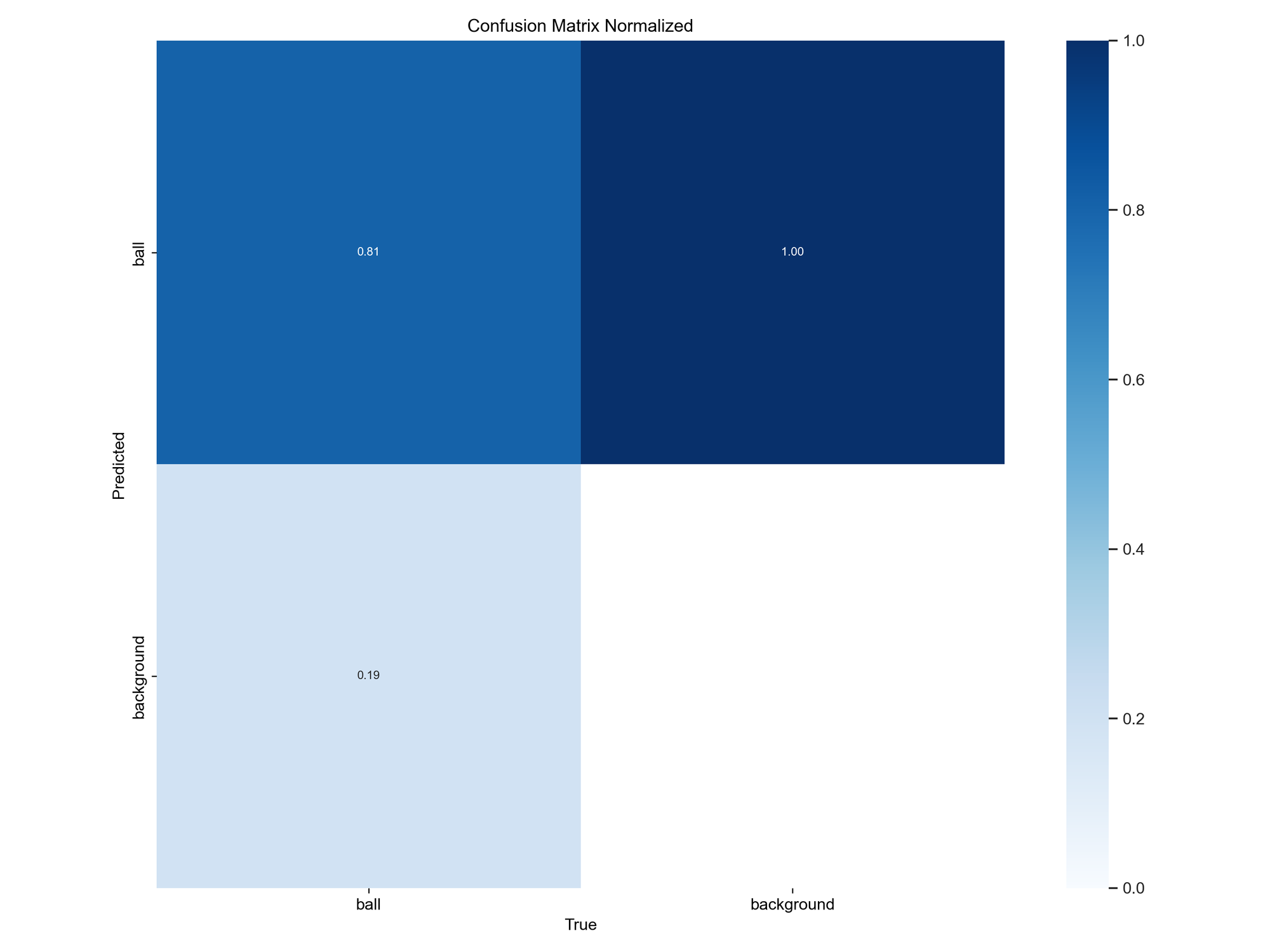

But the confusion matrix seems kind of promising. Looks like it can detect the ball about 80% of the time, which is not bad for my first model.
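A number like "80% of the time" corresponds to recall: out of all the real balls, the fraction the model found. Here is the arithmetic as a small sketch. The counts are hypothetical and only there to show the calculation; they are not read off my actual confusion matrix.

```python
def recall(true_positives, false_negatives):
    """Fraction of real balls that the model detected."""
    total = true_positives + false_negatives
    return true_positives / total if total else 0.0


def precision(true_positives, false_positives):
    """Fraction of detections that really were balls."""
    total = true_positives + false_positives
    return true_positives / total if total else 0.0


# Hypothetical counts, not taken from the real confusion matrix.
tp, fn, fp = 80, 20, 10
print(f"recall={recall(tp, fn):.2f} precision={precision(tp, fp):.2f}")
```

Recall says how often the ball is found, while precision says how often a detection is actually a ball, so both matter for tracking.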

Conclusion

Yay, I made some good progress. My next step is to input a video and see whether it can consistently track the ball from frame to frame (and how fast). Then, I'll need to track the table and the people. So yeah, I'll probably need to retrain my model for another 5 hours with more data 😭