Ping Pong iOS App

My first CCS Computing project: a computer vision Ping Pong scoreboard.

For my CCS Computing Lab class, I'm working on an app that uses computer vision to automatically track and display ping pong scores on a phone. Right off the bat, however, there are a lot of problems to consider. First, the ball can be hit so fast that capturing precise movement data at 60 fps becomes very difficult. Second, the camera has to be positioned to capture the entire table, which may not be possible in every setting.
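To put rough numbers on the frame-rate problem, here is a back-of-the-envelope sketch. The 25 m/s ball speed is an illustrative assumption rather than a measurement, and `distancePerFrame` is a hypothetical helper; the 2.74 m figure is the regulation table length.

```swift
import Foundation

/// Distance (in meters) the ball travels between two consecutive frames.
/// Hypothetical helper, for illustration only.
func distancePerFrame(ballSpeed: Double, framesPerSecond: Double) -> Double {
    return ballSpeed / framesPerSecond
}

let assumedBallSpeed = 25.0   // m/s, an assumed speed for a hard hit
let frameRate = 60.0          // frames per second
let tableLength = 2.74        // meters, regulation table length

let gap = distancePerFrame(ballSpeed: assumedBallSpeed, framesPerSecond: frameRate)
let framesAcrossTable = tableLength / gap

print(String(format: "Ball moves %.2f m between frames", gap))
print(String(format: "Frames captured while crossing the table: %.1f", framesAcrossTable))
```

Under these assumptions the ball moves roughly 40 cm between frames, so only six or seven frames cover a full crossing of the table.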

My First Prototype

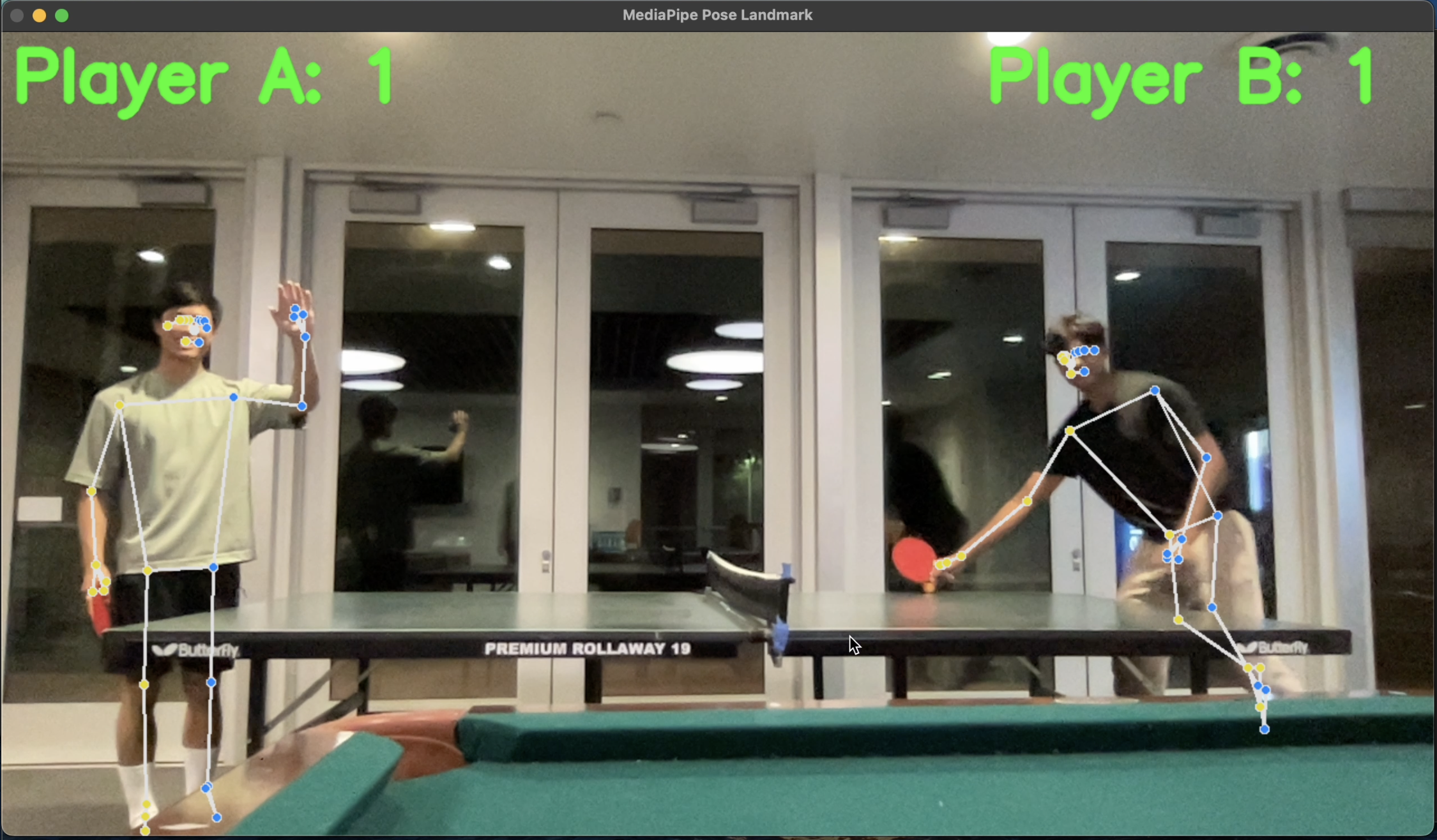

To combat these issues, I decided to track points in a simpler—though less automated—fashion. When a player wins a point, they simply raise their non-dominant hand to indicate that they've won.

Though this approach is much more basic, I think it would work better in practice even if there were a way to track the ball accurately. There are so many edge cases in deciding who wins a rally that it's probably better to let the humans decide than to hope the AI guesses correctly. With the hand-raise method, the players always control the score, so there are no AI mistakes for them to manually override.

To test my idea, I built a quick prototype using Python and my MacBook webcam. The demo code uses OpenCV and Google's MediaPipe to detect the players and determine whether they've raised their hand.

I tested it at the downstairs ping pong table, and it seems to work pretty well! Now it's time to build it for iOS.

Moving it to iOS

This step was a little harder since I have much less experience with Xcode and Swift, but I actually ended up learning a lot by forcing myself to build it here. When building previous Xcode projects, I had no idea what the MVVM (Model-View-ViewModel) or MVC (Model-View-Controller) architectures were, so I got confused when I'd see so many different project formats. This time, however, I decided to learn one and stick with it. SwiftUI is the newer framework that Apple recommends, and it's commonly paired with the MVVM architecture, so I decided to go with that. It's actually pretty similar to React: Views are like "dumb" components that render the props they're given, and ViewModels handle the logic behind them. In MVC, ViewControllers often end up handling both the logic and the rendering, which can get messy.
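Here's a minimal, framework-free sketch of that split. All the names (`Score`, `ScoreboardViewModel`, `renderScoreboard`) are hypothetical and not taken from the app; in a real SwiftUI project the view would be a `View` struct and the view model would typically be an `ObservableObject`, with a plain closure standing in for the change notification here.

```swift
import Foundation

/// Model: plain data with no knowledge of how it is displayed.
struct Score {
    var left = 0
    var right = 0
}

/// ViewModel: owns the state and the logic for changing it.
/// A closure stands in for SwiftUI's change notifications (assumption).
final class ScoreboardViewModel {
    private(set) var score = Score() {
        didSet { onChange?(score) }
    }
    var onChange: ((Score) -> Void)?

    func leftPlayerWonPoint() { score.left += 1 }
    func rightPlayerWonPoint() { score.right += 1 }
}

/// "View": a dumb function that only renders what it is given.
func renderScoreboard(_ score: Score) -> String {
    return "\(score.left) : \(score.right)"
}

let viewModel = ScoreboardViewModel()
var rendered = renderScoreboard(viewModel.score)

// Re-render whenever the view model's state changes.
viewModel.onChange = { rendered = renderScoreboard($0) }

viewModel.leftPlayerWonPoint()
viewModel.leftPlayerWonPoint()
viewModel.rightPlayerWonPoint()
print(rendered)  // prints "2 : 1"
```

The point of the split is that the rendering code never decides when a score changes; it only reacts to whatever state the view model hands it.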

Since SwiftUI is relatively new and offers less control than UIKit (the previous framework), I spent most of my initial time trying to port Google's example iOS code for MediaPipe to work under SwiftUI. Luckily, the process wasn't that hard, as SwiftUI gives us the UIViewRepresentable protocol, which lets you run UIKit code in a SwiftUI app.

Once MediaPipe was set up, all I needed to do was write a callback function that processes the pose information, as seen below.

    extension ScoreDetectionService: PoseLandmarkerServiceLiveStreamDelegate {
        func poseLandmarkerService(_ poseLandmarkerService: PoseLandmarkerService, didFinishDetection result: ResultBundle?, error: Error?) {
            // Called by PoseLandmarkerService once it has finished processing a frame
            guard let poseLandmarkerResult = result?.poseLandmarkerResults.first as? PoseLandmarkerResult else { return }

            for playerLandmarks in poseLandmarkerResult.landmarks {
                // Extract useful pose landmarks (indices follow MediaPipe's pose landmark numbering)
                let noseLandmark = playerLandmarks[0]
                let leftWristLandmark = playerLandmarks[15]
                let leftShoulderLandmark = playerLandmarks[11]

                // Check which side of the screen the player is on (x is normalized to 0...1)
                let isLeftSide = noseLandmark.x < 0.5

                // Check if raising hand by comparing wrist and shoulder heights.
                // Note: in normalized image coordinates y grows downward, so the
                // direction of this comparison depends on how the frame is oriented.
                let isRaisingHand = leftWristLandmark.y >= leftShoulderLandmark.y

                // Pass it to the corresponding player side hand detection service
                if isLeftSide {
                    self.leftSidePlayer.update(isRaisingHand: isRaisingHand)
                } else {
                    self.rightSidePlayer.update(isRaisingHand: isRaisingHand)
                }
            }
        }
    }

This function detects which side each player is on and whether they are raising their hand. Each player's state is then updated to check whether the hand has been raised long enough to count as a point.

    import Foundation

    class PlayerHandDetectionService {
        var handRaised: Bool = false
        var handRaiseStartTime: Date = Date.distantFuture
        var handRaiseCounted = false
        var onPlayerWinsPoint: () -> Void

        init(onPlayerWinsPoint: @escaping () -> Void) {
            self.onPlayerWinsPoint = onPlayerWinsPoint
        }

        // Called once per processed frame; awards a point once the hand
        // has been raised for long enough
        func update(isRaisingHand: Bool) {
            // Get current time
            let currentTime = Date()

            if isRaisingHand {
                if !self.handRaised {
                    // Just started raising our hand: start the timer
                    self.handRaised = true
                    self.handRaiseStartTime = currentTime
                    self.handRaiseCounted = false
                } else if !self.handRaiseCounted && currentTime.timeIntervalSince(self.handRaiseStartTime) >= DefaultConstants.handRaiseDurationThreshold {
                    // We've raised our hand long enough, give a point (only once per raise)
                    self.onPlayerWinsPoint()
                    self.handRaiseCounted = true
                }
            } else {
                // Hand is down: reset hand check state
                self.handRaiseStartTime = Date.distantFuture
                self.handRaised = false
                self.handRaiseCounted = false
            }
        }
    }
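Because the class above reads `Date()` directly, the hold-to-score behavior is hard to exercise without a camera. Below is a sketch of the same state machine with the timestamp passed in, so the timing can be simulated. `HandRaiseDebouncer`, its `threshold` parameter, and the 1.0 second value are stand-ins for illustration; the app's actual threshold lives in `DefaultConstants.handRaiseDurationThreshold`, whose value isn't shown here.

```swift
import Foundation

/// Same hold-to-score logic as the class above, but the current time is a
/// parameter so the behavior can be simulated. Hypothetical type.
final class HandRaiseDebouncer {
    private var raiseStart: TimeInterval?
    private var counted = false
    private let threshold: TimeInterval
    private let onPlayerWinsPoint: () -> Void

    init(threshold: TimeInterval, onPlayerWinsPoint: @escaping () -> Void) {
        self.threshold = threshold
        self.onPlayerWinsPoint = onPlayerWinsPoint
    }

    func update(isRaisingHand: Bool, at time: TimeInterval) {
        guard isRaisingHand else {
            // Hand is down: forget the current raise entirely.
            raiseStart = nil
            counted = false
            return
        }
        guard let start = raiseStart else {
            // First frame of a new raise: start the timer.
            raiseStart = time
            return
        }
        // Held long enough and not yet counted: award exactly one point.
        if !counted && time - start >= threshold {
            counted = true
            onPlayerWinsPoint()
        }
    }
}

var points = 0
// 1.0 s is an assumed threshold, not the app's real value.
let debouncer = HandRaiseDebouncer(threshold: 1.0) { points += 1 }

// Simulated frames: (isRaisingHand, timestamp in seconds)
let frames: [(Bool, TimeInterval)] = [
    (true, 0.0), (true, 0.5),   // raised, but not long enough yet
    (true, 1.0), (true, 1.5),   // threshold reached: one point, not two
    (false, 2.0),               // hand lowered, state resets
    (true, 2.2), (false, 2.6),  // brief raise, no point
]
for (isRaising, time) in frames {
    debouncer.update(isRaisingHand: isRaising, at: time)
}
print(points)  // prints "1"
```

The `counted` flag is what keeps a single long raise from scoring on every frame after the threshold, and the reset on a lowered hand is what filters out brief, accidental raises.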

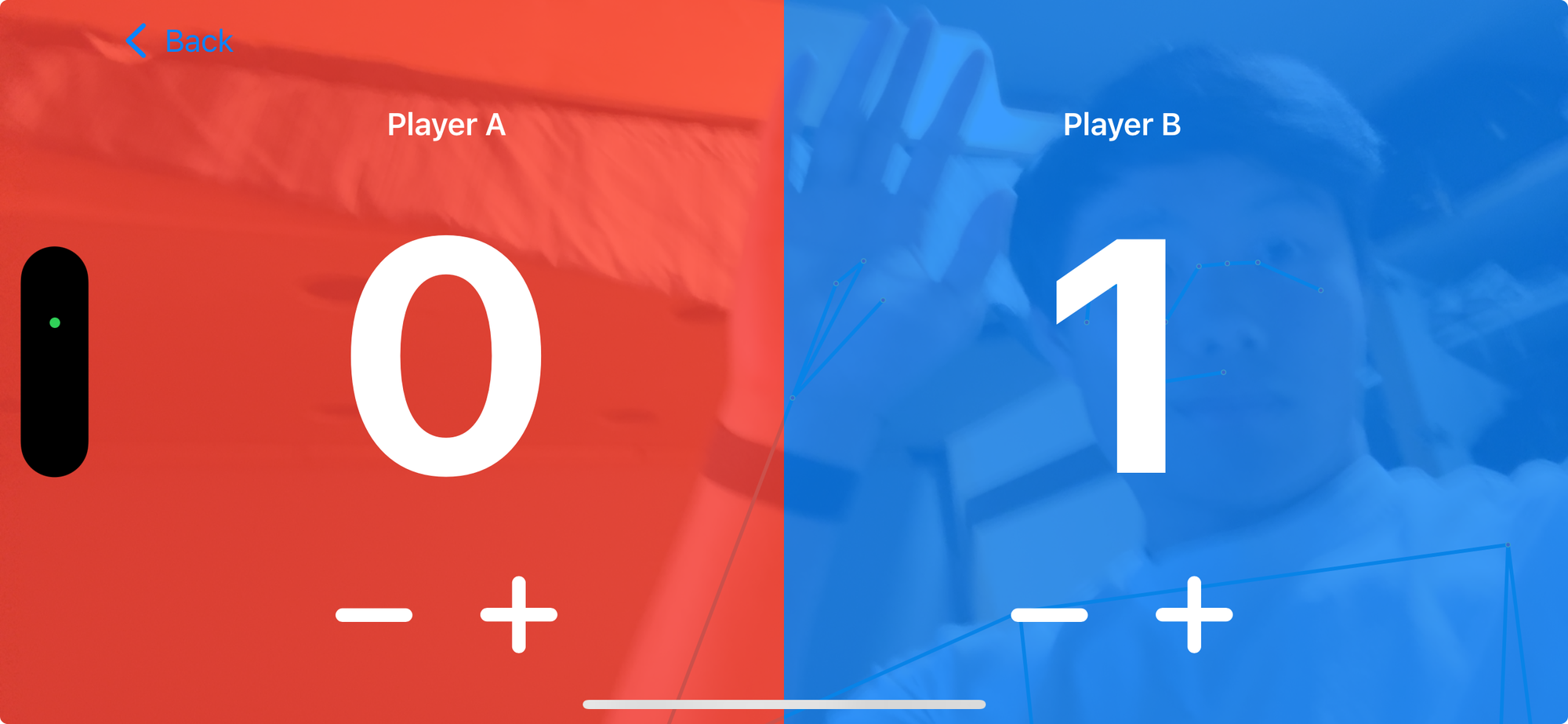

And the result works pretty well!

Next Steps

I actually ended up testing the app and it seems to work fine. The only problem is that no one wants to raise their hand, either because it's awkward or just annoying.

For my next steps, I have two ideas:

- The logical next step is to make the app automatically detect the points (despite the challenges I described earlier). Someone on YouTube kind of got this working, so it's definitely possible.

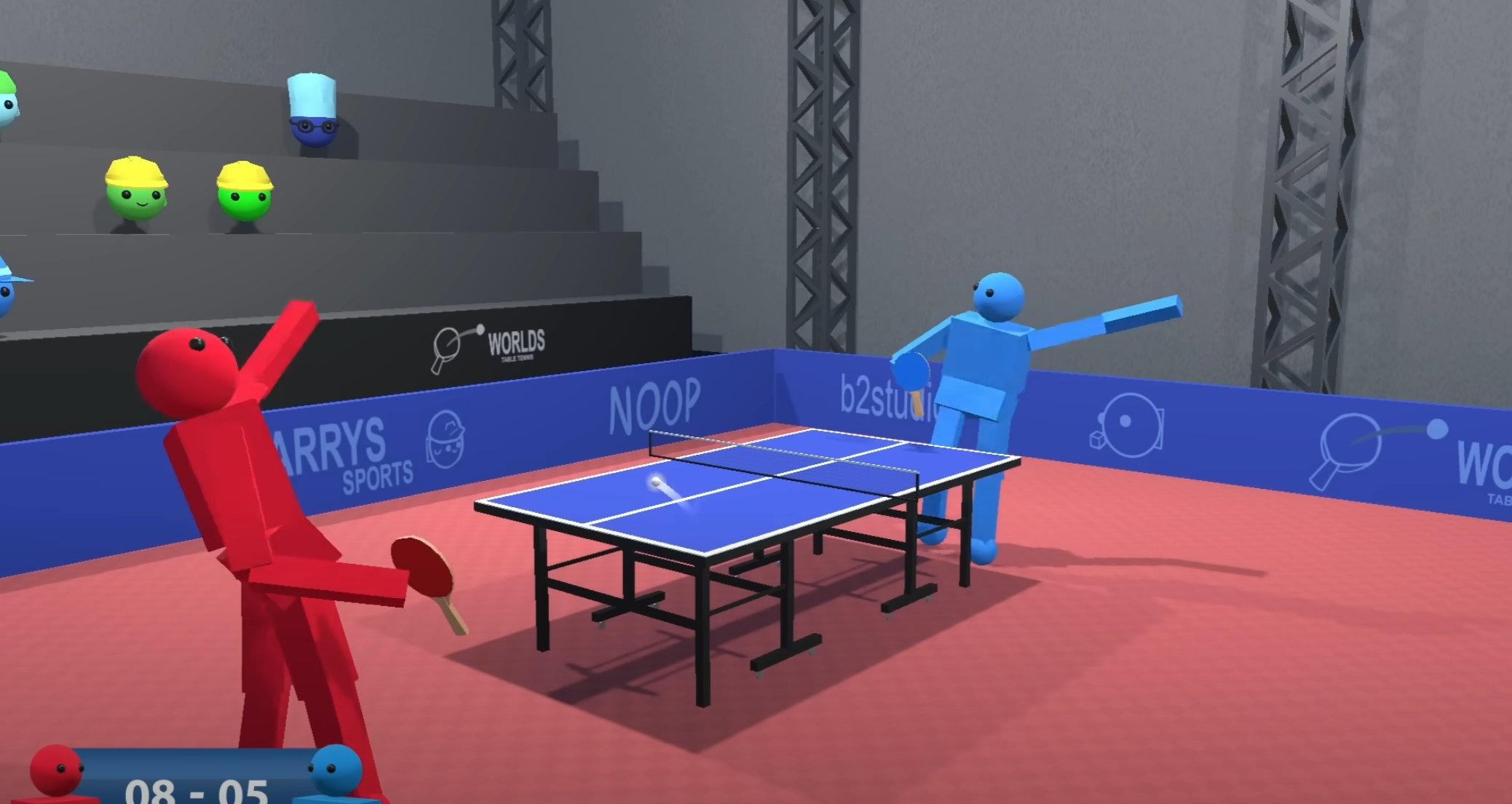

- Perhaps more interesting, I want to build an app that will "transcribe" the game into a full 3D reconstructed live scene, where the player can go back and analyze their form and see where they can improve. I'll do this by detecting where in space each item is at every frame, then rendering that into a 3D scene with something like Three.js or Unity. I was thinking of using low poly models to make everything look more cohesive.

For my second idea, I also want to try implementing a deep learning model that compares your form against how professional players hit the ball in similar situations, so you can see where to improve. To go even further, an LLM could give you specific steps and advice for achieving better form.

Overall, I think my next steps will be way harder and more ambitious, but hey, that sounds more fun anyway.