SLAM-TT: Visualize Ping Pong in 3D!

CCS 1L Fall Project. View real ping pong games in 3D.

I finished this project last quarter and I lowkey forgot to write a post. Here's the repo:

Looking back, the video is cringey and too long. Too bad we're not making one for this quarter; I had some fire ideas for my VR Chinese game.

How I Built It

Since the README in my GitHub repo already covers most of the details, I'll just give a very high-level overview here.

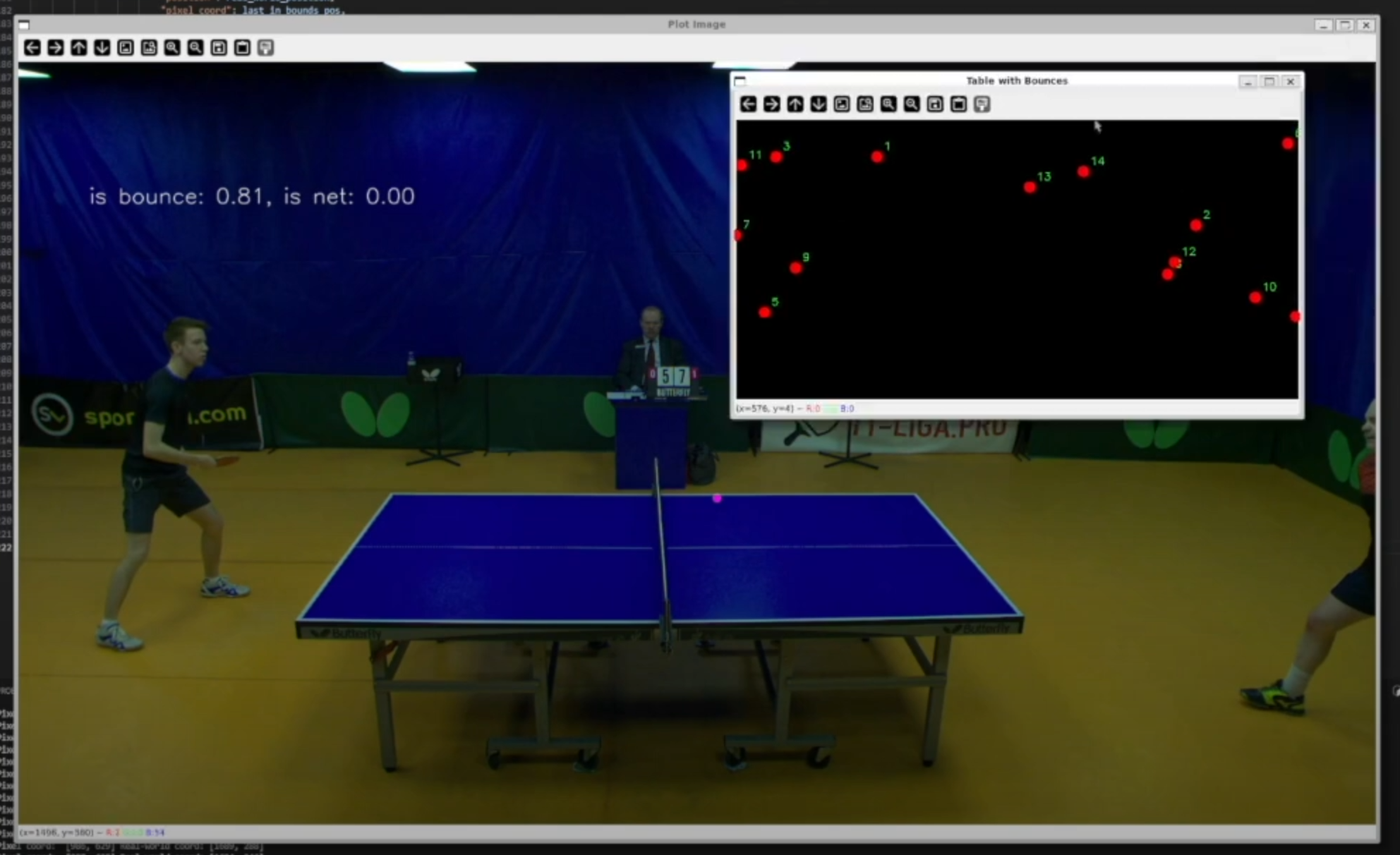

TTNet

This project provides the data and PyTorch code to train a vision model that detects the ball's position in each frame. It also gives me the table boundaries and a per-frame prediction of whether the ball is bouncing on the table.
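The bounce prediction comes out per frame, so it has to be turned into a list of discrete bounces at some point. Below is one simple way to do that, a sketch and not necessarily what my repo does: keep frames where the bounce probability is a local peak above a threshold, and merge peaks that land too close together. The function name `detect_bounces`, the `threshold` of 0.5, and the `min_gap` of 5 frames are all hypothetical choices for illustration.

```python
import numpy as np


def detect_bounces(bounce_prob, threshold=0.5, min_gap=5):
    """Return frame indices where the per-frame bounce probability peaks.

    bounce_prob: 1D sequence, one bounce probability per frame
                 (assumed to come from the model's bounce output).
    threshold:   minimum probability for a frame to count (hypothetical default).
    min_gap:     minimum number of frames between two bounces (hypothetical default).
    """
    p = np.asarray(bounce_prob, dtype=float)
    bounces = []
    for i in range(len(p)):
        if p[i] < threshold:
            continue
        # Keep only local maxima: the frame must not be lower than either neighbor.
        left = p[i - 1] if i > 0 else -np.inf
        right = p[i + 1] if i < len(p) - 1 else -np.inf
        if p[i] < left or p[i] < right:
            continue
        # If this peak is too close to the previous bounce, keep the stronger one.
        if bounces and i - bounces[-1] < min_gap:
            if p[i] > p[bounces[-1]]:
                bounces[-1] = i
            continue
        bounces.append(i)
    return bounces
```

For example, `detect_bounces([0.1, 0.2, 0.9, 0.3, 0.1, 0.1, 0.1, 0.1, 0.7, 0.2])` returns `[2, 8]`.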

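I first detect all ball bounces, then use a homography to map their frame (pixel) coordinates into real-world coordinates relative to the table. This works because at the moment of a bounce the ball is on the table surface, and a homography only maps points that lie on a single plane. From these bounce positions, I can reconstruct the ball's position at every frame.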
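Here's a minimal NumPy sketch of the homography step, assuming the four table corners are already known in pixel coordinates and the table is a regulation 2.74 m × 1.525 m surface. It estimates the matrix with the direct linear transform (DLT); OpenCV's `cv2.findHomography` is the usual off-the-shelf alternative. `fit_homography` and `pixel_to_table` are hypothetical helper names, and the corner pixels below are made-up values.

```python
import numpy as np

TABLE_LENGTH = 2.74  # metres, regulation table length
TABLE_WIDTH = 1.525  # metres, regulation table width


def fit_homography(src, dst):
    """Estimate the 3x3 homography H with dst ~ H @ src from 4+ point pairs (DLT)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows, dtype=float)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def pixel_to_table(H, point):
    """Map a pixel (x, y) on the table plane to table coordinates in metres."""
    x, y = point
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w  # divide out the homogeneous coordinate
```

Usage: with image corners `src = [(100, 400), (540, 400), (480, 250), (160, 250)]` (near-left, near-right, far-right, far-left) and table corners `dst = [(0, 0), (TABLE_WIDTH, 0), (TABLE_WIDTH, TABLE_LENGTH), (0, TABLE_LENGTH)]`, `H = fit_homography(src, dst)` lets `pixel_to_table(H, bounce_pixel)` turn a detected bounce into a position on the table.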

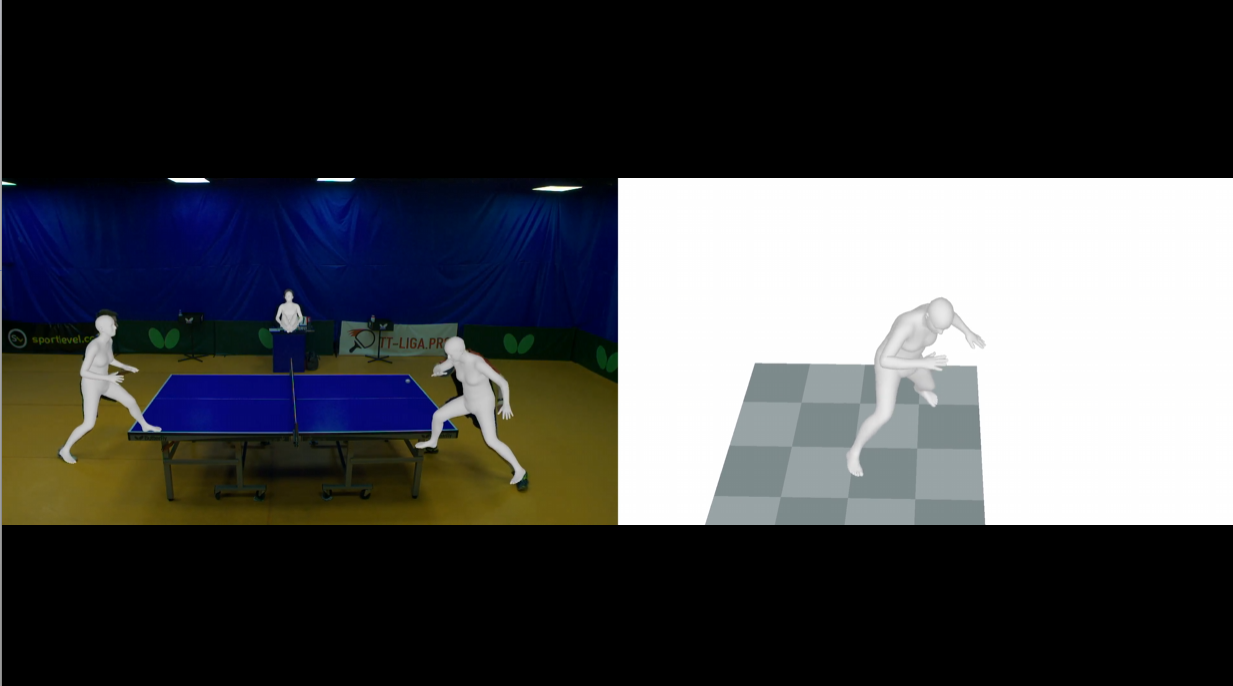

WHAM

This project takes videos of humans and finds their pose and world position. I use this to accurately get the player's movements and strokes.

Luckily, the result can be exported to Blender, where I load the animation and then export it to Unity for final processing.

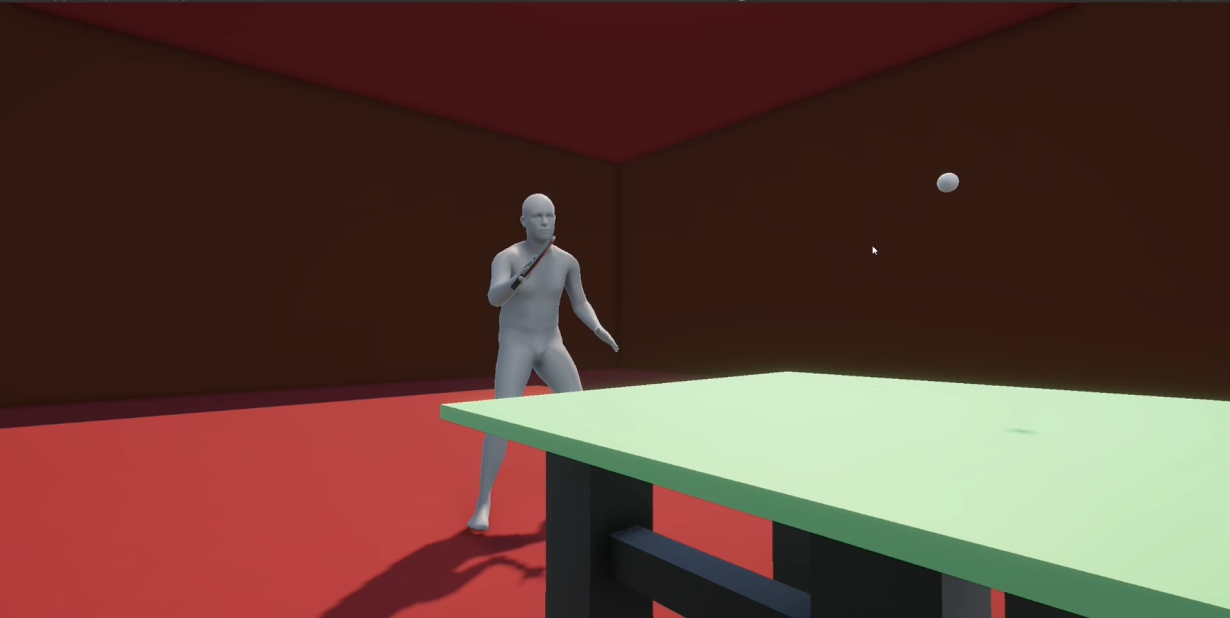

Unity

Unity loads the bounce data and player animations to produce the final result! I kind of cheat by lerping (linearly interpolating) between bounces instead of actually "simulating" the ball with physics or whatever. For this application I think it's fine, but a physics-based version could be worth exploring in the future.
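The lerping idea is simple enough to sketch. In Unity the equivalent would be something like `Vector3.Lerp` between consecutive bounce positions; below is the same idea in Python with `np.interp`, assuming bounce frames are sorted in increasing order and positions are table-plane (x, y) coordinates in metres. `interpolate_track` is a hypothetical name, and the sketch only interpolates along the table plane; it does not model the ball's height.

```python
import numpy as np


def interpolate_track(bounce_frames, bounce_positions, num_frames):
    """Linearly interpolate (lerp) table-plane positions between bounce keyframes.

    bounce_frames:    increasing frame indices where bounces were detected.
    bounce_positions: (x, y) table coordinates for each bounce.
    num_frames:       total number of frames in the clip.
    """
    frames = np.arange(num_frames)
    pos = np.asarray(bounce_positions, dtype=float)
    # np.interp lerps between keyframes; frames before the first bounce or after
    # the last one are clamped to the nearest bounce position.
    xs = np.interp(frames, bounce_frames, pos[:, 0])
    ys = np.interp(frames, bounce_frames, pos[:, 1])
    return np.stack([xs, ys], axis=1)  # shape (num_frames, 2)
```

For example, with bounces at frames 0 and 10 at (0, 0) and (1, 2), frame 5 lands halfway at (0.5, 1.0).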

Conclusion

If I feel like it, I'll update this post with more visuals. I put a lot more effort into the project than it seems at first glance. Check out the video if you didn't see it above.